How to Safely and Effectively Adopt AI Coding Tools Without Breaking Production

AI coding tools, like Cursor, Windsurf, and Claude Code, aren't going to replace software engineers. But when adopted correctly, they will increase your team's velocity. In this post, I share how to safely adopt AI without introducing tech debt or causing production outages.

If you're reading this post, there's a pretty good chance you're aware of AI coding tools like Cursor (even if you haven't used them), and you've probably seen a flood of posts about "Vibe Coding." Lately, the discussion seems to lean pretty heavily toward the extremes, which is honestly true of every new technology. Scroll LinkedIn and you'll see a post claiming that AI has killed software engineering, followed by one insisting that AI can never write production code in an established codebase.

TL;DR

No, LLMs (the technology every current AI coding tool is built on) aren't going to kill software engineering as a career path. Yes, AI coding tools like Cursor, Windsurf, Claude Code, Roo, etc., can make your software engineers more productive at writing production code, if they're integrated into your SDLC correctly. If that's what you're here for, skip straight to my Cursor tips.

A Hype of Extremes

Some folks (usually not software engineers) will happily shout that AI coding tools are the end of software engineers (and traditional software products). They'll say that a doctor can create a full practice management suite (with a patient portal) in a weekend with no coding experience, just on "vibes."

Alternatively, you'll have people (many of whom haven't even tried the tools) insist that AI coding tools are incapable of anything more complex than mockups and quick prototypes. These folks remind me of the people who claim that no one should use IDEs and we should all stick to vim. (As an aside, I use vim all the time, just not as my main code editor.)

So, Which Is It?

Like pretty much everything, the reality is somewhere in the middle and awfully nuanced. AI coding tools are realistically just another layer of abstraction that will boost developer productivity. High-level languages like C let software engineers write against an abstraction instead of hardware-specific assembly, and the result was that people wrote more software. The same is true of every other advancement: faster processors, more memory, garbage collectors, virtual machines, and so on.

When we make software engineers more productive, we universally turn around and ask them to write more software. I've yet to be part of a business that didn't wish it could do more, faster. There are always more user problems to solve, or some market change that presents a massive (and time-sensitive) opportunity. The world wants more software, and it wants it now (or, preferably, yesterday). Making engineers more productive will increase demand for software engineers, not eliminate it.

The AI coding tools on the market can't replace engineers. They're constantly getting better, but no matter what you do, an LLM can't do anything truly novel; it's impossible. A human being can think up novel things and guide an LLM through implementing them. More parameters, larger context windows, and more training data won't change that. An LLM will never be AGI. You also need to understand how software is implemented to guide an LLM to build it properly. Effectively, we've moved part of the way up another layer of abstraction, and that's a productivity boost.

Yes, AI Can Write Production Code

Sure, if you put an AI coding tool like Cursor, Windsurf, etc., in the hands of someone who doesn't understand software engineering, they can quickly build something that looks like it works but doesn't hold up to the realities of production. If you provide vague instructions and spam "Accept All Changes," you'll end up with something even you can't continue to support and extend, let alone work on collaboratively as a team.

That's no different from complaining that someone who watches a YouTube video, imports a bunch of Python libraries, and copies and pastes from Stack Overflow will produce a lot of spaghetti code.

The quality of the code you produce is proportional to the thought and rigor you put into producing it. This is true no matter what tools you're using, but even more so with AI coding tools. I think of these tools like the super soldier serum from the MCU: they amplify whatever is already there. If your SDLC allows sloppy, duplicative code with poor test coverage, you'll get a lot more of that with tools like Cursor or Windsurf. If your SDLC produces well-designed, well-structured, well-tested code today, then you'll be able to produce it faster.

Shore Up Your Foundation

If your SDLC produces well-designed, well-structured, well-tested code today, then adopting AI coding tools will increase your velocity without introducing new risks. The guardrails of a well-designed CI/CD pipeline aren't specific to AI coding, but I absolutely believe in them, and I think they're even more critical when you introduce tools like Cursor, Windsurf, Claude Code, etc., into your toolkit.

Before widely rolling out AI coding tools to your team, I highly recommend reviewing your CI/CD process to ensure you're starting from a strong foundation. These are my personal recommendations; pick and choose what works best for your team.

- Static code analysis

- Good unit test coverage (that tests the right things)

- Private dependency mirror

- Dependency analysis (security and license checks)

- Strong instrumentation (logs, traces, and metrics with SLOs and alerting)

- Feature flags

- Small incremental changes, deployed to production frequently
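
Feature flags pair naturally with small, frequent deploys: an AI-assisted change can ship dark, roll out to a small share of users, and be switched off without a redeploy if it misbehaves. The TypeScript below is a minimal, hypothetical sketch of a percentage-rollout check against an in-memory config. The flag name `new-invoice-export` and the helpers `bucketFor`, `isEnabled`, and `exportInvoices` are illustrative assumptions, not the API of any particular feature-flag product.

```typescript
// Hypothetical in-memory flag config; a real setup would load this from a
// flag service or config store so it can change without a redeploy.
interface FlagConfig {
  enabled: boolean; // kill switch: false turns the new path off for everyone
  rolloutPercent: number; // 0-100: share of users who get the new path
}

const flags: Record<string, FlagConfig> = {
  "new-invoice-export": { enabled: true, rolloutPercent: 10 },
};

// Deterministically map a user ID to a bucket in [0, 100), so the same user
// always gets the same answer for a given rollout percentage.
function bucketFor(userId: string): number {
  let hash = 0;
  for (let i = 0; i < userId.length; i++) {
    // Keep the running hash within unsigned 32 bits.
    hash = (hash * 31 + userId.charCodeAt(i)) >>> 0;
  }
  return hash % 100;
}

function isEnabled(flagName: string, userId: string): boolean {
  const config: FlagConfig | undefined = flags[flagName];
  // Unknown or switched-off flags fall back to the existing behavior.
  if (config === undefined || !config.enabled) {
    return false;
  }
  return bucketFor(userId) < config.rolloutPercent;
}

// Usage: route between the legacy code and the new (AI-assisted) code.
function exportInvoices(userId: string): string {
  return isEnabled("new-invoice-export", userId)
    ? "new export path"
    : "legacy export path";
}
```

Hashing the user ID rather than rolling a random number keeps each user's experience stable across requests, and defaulting unknown or disabled flags to the legacy path means the safe behavior is also the fallback behavior.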

A good proxy for how ready your team is to adopt AI coding tools is how easily a new engineer can learn your codebase. Effectively, your AI tool is a new engineer who starts over learning your code every time you use it.

So, How Do You Safely Adopt AI Tools Without Breaking Production?

Here are my practical tips for adopting AI coding tools like Cursor, Windsurf, Claude Code, etc., while maintaining high quality and consistency and avoiding major regressions. My tips are specific to Cursor since that's become my tool of choice, but the general principles should apply to any tool.

Document, Document, Document

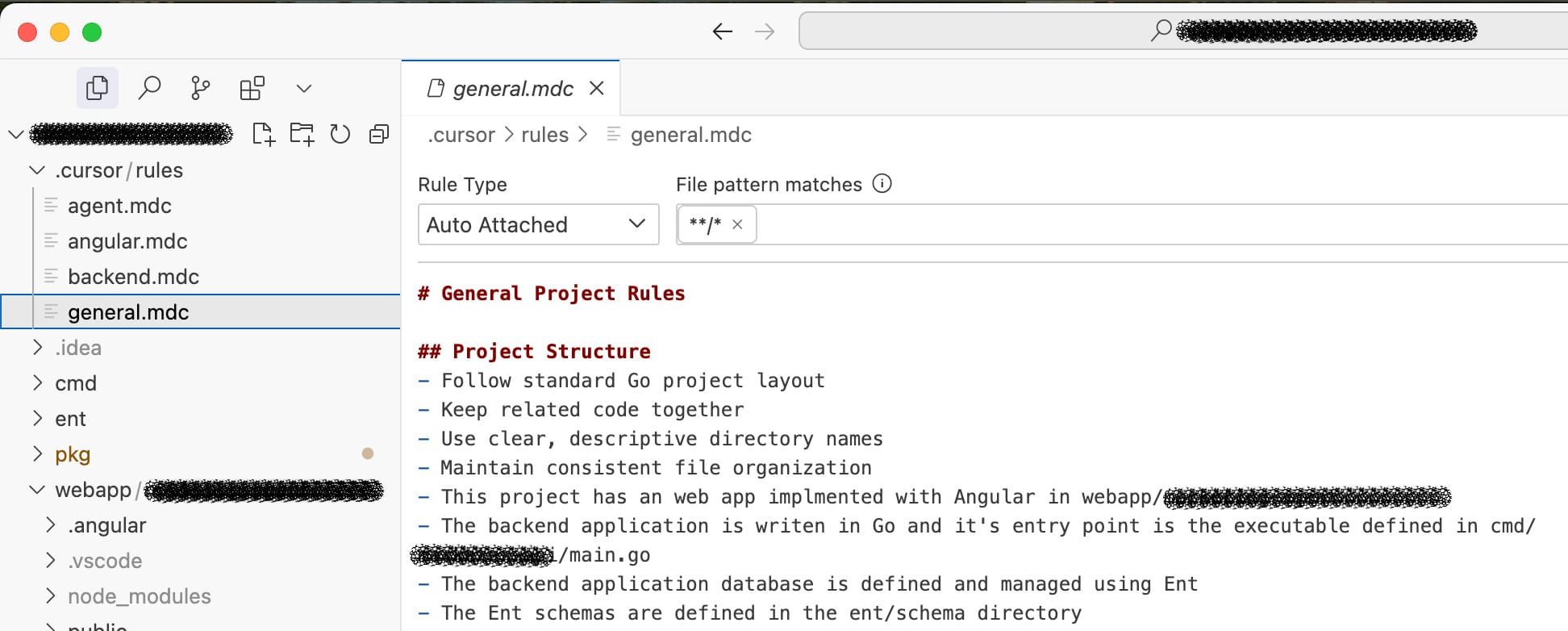

Cursor, specifically, has a feature called Rules For AI, which lets you define markdown files of rules that can be automatically included in your prompt context (either globally or conditionally, using path-matching globs). Use these markdown files to document your project structure, the dependencies you use, and your style rules, and to provide code examples for established patterns.

If you've ever done any woodworking, you're probably familiar with using a reference piece. If you need multiple pieces of wood the same length, you measure and cut one piece, then use it to measure all the rest. A reference piece ensures every cut is the same length without measuring each piece individually. If you instead used the previous piece you cut to measure the next one, small variations would compound until your last piece differed wildly from the first. Adding the best implementation of each pattern in your codebase to your Cursor rules as a code example makes it your reference piece.

Create global Cursor rules that define your project structure and outline the major components, frameworks/libraries, and general coding style. You should also create specific rules files, scoped with path patterns, for each major component of your project. I also strongly recommend including READMEs throughout your project that explain how to build and test specific components.

Cursor rules live within your project, so they can (and should) be checked in and shared across your team. This ensures everyone is working from the same baseline to help get consistent results across team members.

Treat Cursor Like a Junior Team Member

You're working with the agent on the implementation, not handing it all off. You wouldn't hand off a full user story to a new junior team member and expect them to go build a full implementation without your input. Don't expect Cursor to either. You'll get the most consistent results with the highest quality if you spend the time and effort thinking through how you would implement the feature, what patterns to follow, and where to make the changes.

This doesn't need to go down to the level of pseudocode, but describe what components you'd build, where you'd implement them, and what APIs/packages to use. If you tell Cursor the implementation you want, you'll actually get that implementation. If you only describe the functionality you want, you shouldn't be surprised when the implementation isn't what you expected.

Sometimes you don't know the best way to implement something. That's fine; treat it like an experiment. Describe the functionality, see what Cursor produces, ask it to explain the code, and iterate. Don't be afraid to reject or revert the changes and try again.

Work Small, With a Clean Context

One criticism I consistently see offered as proof that AI coding tools aren't ready for production is that agents will "run away" and start touching unrelated areas of the codebase, introducing breaking changes or huge commits. Often, this happens because someone asked for large, broad-reaching changes in a single prompt, used a single long-running context for multiple unrelated changes, or both. As the adage goes, "How do you eat an elephant? One bite at a time."

You wouldn't approach a problem by trying to implement everything at once, and you shouldn't ask Cursor to do that either. Break your implementation into small, discrete steps and have Cursor implement them one at a time. This keeps Cursor focused and avoids the pattern of spiraling outward, touching unrelated code, and implementing features you didn't ask for.

I also recommend starting every distinct change with a fresh chat to keep your context small. As a conversation grows, the tools tend to summarize older context to keep its size constrained, and details can get lost in that summary. Even when everything stays in context, keep in mind that the LLM is effectively performing a semantic search against that context. The more unrelated information you have in context, the more likely the LLM is to use the wrong data as the basis for its generation and hallucinate. Keeping your context small and focused produces the best results.

Know When to Bail Out

Sometimes, no matter how you change your prompt, your AI coding tool just won't get it right. Remember, you're still the software engineer. Spinning your wheels trying to get the LLM to do what you want can easily waste all the efficiency you gained by using it. Sometimes it's just better to step back and implement something yourself. It's also a good idea to then add that implementation to your Cursor rules and READMEs as an example for the future.

For some reason, Cursor consistently fails to implement background polling of status endpoints for async APIs in my projects. It always gets the RxJS pipelines wrong and either polls once and exits or polls forever regardless of the response. Now, when I'm implementing that pattern, I let Cursor stub it out and then finish the logic myself. I've also had instances where Cursor just couldn't get some responsive styling right when all it needed was one extra class.
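
Both of those failure modes come down to the termination logic. The sketch below is not the author's code; it is a minimal illustration written with plain `async`/`await` rather than RxJS so it runs without dependencies. `fetchStatus`, the `JobStatus` values, and the attempt cap are assumptions about a hypothetical status endpoint. In RxJS, the same "stop on a terminal status, but still emit it" behavior is usually expressed with `takeWhile(predicate, true)`, where the second (`inclusive`) argument also emits the value that ends the stream.

```typescript
// Hypothetical job states for an async API's status endpoint.
type JobStatus = "pending" | "running" | "succeeded" | "failed";

interface StatusResponse {
  status: JobStatus;
}

// States after which polling should stop.
const TERMINAL_STATES: ReadonlySet<JobStatus> = new Set<JobStatus>([
  "succeeded",
  "failed",
]);

const sleep = (ms: number): Promise<void> =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Poll until the job reaches a terminal state, or give up after maxAttempts.
// Exit 1: a terminal status is returned to the caller (including "failed").
// Exit 2: the attempt cap is hit, which rejects instead of polling forever.
async function pollUntilDone(
  fetchStatus: () => Promise<StatusResponse>,
  intervalMs: number,
  maxAttempts: number,
): Promise<StatusResponse> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const response = await fetchStatus();
    if (TERMINAL_STATES.has(response.status)) {
      return response;
    }
    // Non-terminal: wait before the next request, but not after the last one.
    if (attempt < maxAttempts) {
      await sleep(intervalMs);
    }
  }
  throw new Error(`Job still not finished after ${maxAttempts} attempts`);
}
```

Making both exits explicit, a terminal status or an attempt cap, is what prevents both "polls once and exits" and "polls forever." This is also the part worth finishing by hand if the generated stub gets it wrong.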

When Cursor (or any other AI tool) works well, lean into it and reap the benefits. When you can't get it to do what you want, roll up your sleeves and do it yourself. Don't waste time fighting the machine.

Software Engineering is Dead, Long Live Software Engineering

LLMs (and thus AI coding tools like Cursor, Windsurf, Claude Code, etc.) aren't going to replace software engineers. Most of the criticisms of these tools are true. They can generate huge, sweeping change sets, import packages that don't exist, implement features you didn't ask for, and break or delete all your tests. However, used well, they will make your team more productive. At the end of the day, they're all still just tools; you can't blame your tools for how you use them.