Getting Started with the OpenAI Responses API Using Go: An Introductory Guide

The OpenAI Responses API simplifies building agentic AI systems, and Go (Golang) is a perfect fit for integrating it in production. This guide walks through real, working examples using the official OpenAI Go SDK, covering state management, tool calling, structured output, file search, and more.

If you're only here for the code, skip ahead to the examples under "Using the Official OpenAI Go SDK with the Responses API."

I feel like Go is a perfect fit for building software that integrates with LLMs, and that's especially true with the new OpenAI Responses API. Go's concurrency model is well suited to high-throughput, IO-bound applications, such as services that spend most of their time waiting for LLM API requests to complete. If your software calls a third-party LLM like OpenAI, no choice in your tech stack will make an individual request faster. What you can control is how many concurrent user requests you can handle, and how few resources you need to handle them. To me, this is where Go shines in building AI-powered applications.

With that in mind, I'm surprised there aren't more resources online about building LLM applications with Go, and especially few about the new Responses API that OpenAI launched in March 2025. Previously, the Go community had to rely on third-party packages that lagged behind the API or roll their own direct integration. OpenAI released an official openai-go SDK in July 2024, but the API Reference on its website only shows curl, JavaScript, Python, and C# examples. While the package seems to be keeping up with the latest API changes, its documentation is still focused on the Chat Completions API.

In this guide, I'll walk through the new Responses API from OpenAI and provide code examples for using it with the official openai-go Go SDK. In future posts, I'll provide hands-on examples for building real-world applications with the Responses API. For now, consider this post an introduction to using the API with Go.

What is the OpenAI Responses API?

OpenAI released the Responses API in March 2025 as a new API designed to simplify building AI agents. It aims to offer the same simplicity as the Chat Completions API while adding core primitives that make creating agents easier, and its design draws on the core lessons of the OpenAI Assistants API. Alongside Responses, OpenAI also announced that it will deprecate the Assistants API, with a sunset date likely in the first half of 2026.

What About Chat Completions?

The Responses API is going to replace the Assistants API. OpenAI has made it clear, however, that it plans to continue supporting and enhancing the Chat Completions API, with no end-of-life planned. That said, its documentation and announcements also make it clear that the future is the Responses API, and OpenAI recommends using it for new projects.

The Responses API is meant to be a superset of the Chat Completions API. It supports all the capabilities of Chat Completions and has a similarly simple API design, but it builds in primitives like built-in retrieval tools and state management that make it easier to build AI agents. The Responses API provides some abstractions for agent building blocks you previously had to implement yourself.

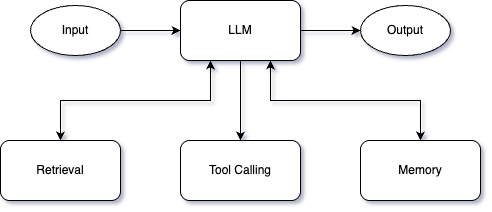

The Building Blocks of AI Agents

At their core, AI agents are built around LLMs augmented with capabilities for retrieval, tool calling, and memory. Current LLMs already support these capabilities, and the Responses API doesn't introduce new ones. What it does do is simplify integrating them into your projects by supporting them in a single API call instead of requiring you to build them yourself. These abstractions do mean you're using OpenAI's specific implementations, which may or may not be what you want. But having an out-of-the-box option within the API is nice if you don't need custom RAG or memory management. Anthropic's blog post "Building effective agents" goes into much more detail.

Retrieval: Fetching external data to augment your prompt with additional, request-specific context for the LLM. Typically, this uses something like vector search against a knowledge base.

Tool Calling: By providing the LLM with the definition of various functions and their input parameters, we can allow it to request the execution of these functions with specific arguments. The LLM doesn't actually execute the functions; your code needs to execute the functions and return the results to the LLM in a follow-up request.

Memory: Since AI agents are built around multiple prompts, such as calling a tool and processing the results to generate a response, we need a way to maintain state across multiple prompts. Typically, this would involve providing a chain of interleaved user and assistant messages with each new prompt so that the LLM has the message history.
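Without help from the API, that usually means keeping a slice of messages and resending the whole slice with every request. A minimal sketch, where `Message` and `Conversation` are hypothetical types (not part of any SDK) that use the common role/content message shape:

```go
package main

import "encoding/json"

// Message is a hypothetical chat message using the common role/content shape.
type Message struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

// Conversation accumulates the full history that must be resent each turn.
type Conversation struct {
	Messages []Message
}

// Add appends a message to the history.
func (c *Conversation) Add(role, content string) {
	c.Messages = append(c.Messages, Message{Role: role, Content: content})
}

// Payload serializes the entire history; it grows with every turn.
func (c *Conversation) Payload() ([]byte, error) {
	return json.Marshal(c.Messages)
}
```

The cost of this approach is that every request carries the full, ever-growing history, and your code owns storing it between requests.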

How Does the Responses API Make Building Agents Easier?

The Responses API provides built-in mechanisms to leverage OpenAI services for retrieval, tool calling, and memory without implementing them yourself.

The Responses API introduces a built-in tool called file_search that lets the LLM query your Vector Stores to retrieve information that augments the prompt. This retrieval happens automatically, without any extra API calls from your code. Just include the file_search tool in your tools list, and the LLM can perform retrieval transparently.

The Chat Completions API also supports tool calling. What's unique to the Responses API is the addition of multiple built-in tools, like the file_search tool we just discussed. The Responses API supports the file_search, web_search_preview, and computer_use_preview tools. Chat Completions can also search the web, but only through dedicated search models that always perform a web search before responding, whereas the Responses API lets the model decide when to use web search as a tool.

Finally, the Responses API introduces the previous_response_id parameter (PreviousResponseID in the Go SDK). The API can optionally store your messages (storage is enabled by default), and with each request you can pass the ID of the previous response. This lets OpenAI store and manage your message history instead of you managing state manually in your code.

Using the Official OpenAI Go SDK with the Responses API

In July 2024, OpenAI released the openai-go package, an officially maintained Go SDK that supports all of its APIs. Although the package has been updated to support the Responses API, its README and examples still mostly focus on the Chat Completions API.

The following are simple examples of using the Responses API in Go: setup, state management, tool calling, web search, vector stores and file search, input files and images, and structured output.

Getting Started

Getting started with the Responses API in Go is pretty straightforward. You need an API key, a client created with that key, and a call to .Responses.New() on that client with some basic parameters.

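```go
package main

import (
	"context"
	"log"
	"os"

	"github.com/openai/openai-go"
	"github.com/openai/openai-go/option"
	"github.com/openai/openai-go/responses"
)

func main() {
	ctx := context.Background()

	// Create a client using the API key from the environment
	oaiClient := openai.NewClient(
		option.WithAPIKey(os.Getenv("OPENAI_API_KEY")),
	)

	params := responses.ResponseNewParams{
		Model:           openai.ChatModelGPT4o,
		Temperature:     openai.Float(0.7),
		MaxOutputTokens: openai.Int(512),
		// Input is a union type; OfString sends a single text message
		Input: responses.ResponseNewParamsInputUnion{
			OfString: openai.String("What were the original design goals for the Go programming language?"),
		},
	}

	resp, err := oaiClient.Responses.New(ctx, params)
	if err != nil {
		log.Fatalln(err.Error())
	}

	// OutputText returns the text output of the response
	log.Println(resp.OutputText())
}
```

Using the OpenAI Responses API with Go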

Even this simple example shows some of the peculiarities of using the OpenAI SDK from Go (or any other strongly typed language). The OpenAI APIs are polymorphic: many fields accept either a plain string for simple use cases or one or more complex types. To represent this polymorphism in Go, the SDK defines union types with multiple fields prefixed with Of. Only one of the Of fields may be populated at a time; all the others must be left empty.

For the Input field of our ResponseNewParams type, the ResponseNewParamsInputUnion can have either the OfString field populated for a single text message, or the OfInputItemList field populated with a list of input items (each itself a union type) to provide multiple messages of different types.

State Management

Using the PreviousResponseID field of ResponseNewParams, we can use OpenAI as memory for our agents, continuing the same context without manually sending the message history with every API call.

```go
params := responses.ResponseNewParams{
	Model:           openai.ChatModelGPT4o,
	Temperature:     openai.Float(0.7),
	MaxOutputTokens: openai.Int(10240),
	Input: responses.ResponseNewParamsInputUnion{
		OfString: openai.String("What were the original design goals for the Go programming language?"),
	},
	Store: openai.Bool(true), // This is already true by default
}

resp, err := oaiClient.Responses.New(ctx, params)
if err != nil {
	log.Fatalln(err.Error())
}
log.Println(resp.OutputText())

// Chain the follow-up question to the previous response
params.PreviousResponseID = openai.String(resp.ID)
params.Input = responses.ResponseNewParamsInputUnion{
	OfString: openai.String("Who created it?"),
}

resp, err = oaiClient.Responses.New(ctx, params)
if err != nil {
	log.Fatalln(err.Error())
}
log.Println(resp.OutputText())
```

Managing LLM State with the Responses API in Go

To use this functionality, OpenAI must store your responses. Storage is enabled by default in the Responses API; you can disable it by setting Store to false, but then you'll need to send the conversation history yourself.

By setting PreviousResponseID to the ID of our last response, the API has context to know that "it" in the message "Who created it?" refers to the Go programming language.
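Under the hood, the SDK sends this as a JSON request body. The struct below is a hypothetical, hand-written subset of that body, shown only to make the wire format visible: the field names `model`, `input`, `previous_response_id`, and `store` come from the Responses API, while `followUpRequest`, `buildFollowUp`, and the `resp_123` ID are placeholders.

```go
package main

import "encoding/json"

// followUpRequest is a hypothetical subset of a Responses API request body.
type followUpRequest struct {
	Model              string `json:"model"`
	Input              string `json:"input"`
	PreviousResponseID string `json:"previous_response_id,omitempty"`
	Store              *bool  `json:"store,omitempty"`
}

// buildFollowUp creates the body for a follow-up turn. Only the new user
// message is sent; earlier turns are referenced by the previous response ID.
func buildFollowUp(prevID, text string) ([]byte, error) {
	store := true
	return json.Marshal(followUpRequest{
		Model:              "gpt-4o",
		Input:              text,
		PreviousResponseID: prevID,
		Store:              &store,
	})
}
```

Compared with resending the full history, the follow-up request stays the same size no matter how long the conversation gets.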

Tool Calling

The Responses API makes it easy to let your agent execute functions, both to take external actions and to enrich the context for completing a request. The model you're calling doesn't execute any of the tools directly. Instead, you create tool definitions that describe each tool and its input arguments, and you provide the list of available tools when you invoke the model.

If the model wants to invoke a tool, the response will contain the tool name and arguments. Your code should run the function associated with the tool and send its result in the next request, along with the previous response ID to continue the context.
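At the wire level, the model's tool request arrives as an output item of type `function_call` carrying a `call_id`, a `name`, and a JSON-encoded `arguments` string, and you answer with an input item of type `function_call_output` that echoes the same `call_id`. Here is a stdlib-only sketch of that round trip; the struct types and `answerCall` are hypothetical and cover only the fields used here, and `call_abc` is a placeholder ID.

```go
package main

import "encoding/json"

// functionCall is a hypothetical subset of a "function_call" output item.
type functionCall struct {
	Type      string `json:"type"`
	CallID    string `json:"call_id"`
	Name      string `json:"name"`
	Arguments string `json:"arguments"` // JSON-encoded arguments, as a string
}

// functionCallOutput is the input item that returns a tool result.
type functionCallOutput struct {
	Type   string `json:"type"`
	CallID string `json:"call_id"`
	Output string `json:"output"`
}

// answerCall parses a function_call item and builds the matching
// function_call_output item using the supplied result.
func answerCall(raw []byte, result string) ([]byte, error) {
	var call functionCall
	if err := json.Unmarshal(raw, &call); err != nil {
		return nil, err
	}
	return json.Marshal(functionCallOutput{
		Type:   "function_call_output",
		CallID: call.CallID, // must match the call being answered
		Output: result,
	})
}
```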

First, we need to define our tools so that our model knows their purpose and how to call them.

```go
// getStockTool defines the OpenAI tool for getting the price of a single stock by ticker symbol
var getStockTool = responses.ToolUnionParam{
	OfFunction: &responses.FunctionToolParam{
		Name:        "get_stock_price",
		Description: openai.String("The get_stock_price tool retrieves the current price of a single stock by its ticker symbol"),
		// Parameters is a JSON Schema describing the tool's arguments
		Parameters: openai.FunctionParameters{
			"type": "object",
			"properties": map[string]interface{}{
				"symbol": map[string]string{
					"type":        "string",
					"description": "The ticker symbol of the stock to retrieve",
				},
			},
			"required": []string{"symbol"},
		},
	},
}
```

We'll also define a struct for unmarshalling the input arguments from our model, along with the code that implements (a mock of) the tool function.

```go
// GetStockPriceArgs represents the arguments for the get_stock_price function
type GetStockPriceArgs struct {
	StockSymbol string `json:"symbol"`
}

// GetStockPrice is the implementation of the get_stock_price function
func GetStockPrice(ctx context.Context, args []byte) (string, error) {
	var getArgs GetStockPriceArgs
	if err := json.Unmarshal(args, &getArgs); err != nil {
		return "", fmt.Errorf("failed to parse get_stock_price arguments: %w", err)
	}

	// Validate the stock symbol
	if strings.TrimSpace(getArgs.StockSymbol) == "" {
		return "", fmt.Errorf("stock symbol is required")
	}

	// Return a static placeholder price
	return "$198.53 USD", nil
}
```

For convenience, we'll create a list of our tool definitions (in this case, just one) so it's easy to pass them to multiple LLM invocations, and we'll wrap a switch statement that calls the appropriate Go function for each tool the model may invoke.

```go
// agentTools is the list of all tools available to the agent
var agentTools = []responses.ToolUnionParam{
	getStockTool,
}

// processToolCall dispatches a tool call from the OpenAI API to its Go implementation
func processToolCall(ctx context.Context, toolCall responses.ResponseFunctionToolCall) (string, error) {
	switch toolCall.Name {
	case "get_stock_price":
		return GetStockPrice(ctx, []byte(toolCall.Arguments))
	default:
		return "", fmt.Errorf("unknown tool: %s", toolCall.Name)
	}
}
```

Finally, we'll update our agent code to call processToolCall for each tool call in the response to our first LLM invocation, and add each result to params.Input as a function call output item (via ResponseInputItemParamOfFunctionCallOutput) before making another API call to get our final output.

```go
params := responses.ResponseNewParams{
	Model:           openai.ChatModelGPT4o,
	Temperature:     openai.Float(0.7),
	MaxOutputTokens: openai.Int(512),
	Tools:           agentTools,
	Input: responses.ResponseNewParamsInputUnion{
		OfString: openai.String("What's the current stock price for Apple?"),
	},
}

resp, err := oaiClient.Responses.New(ctx, params)
if err != nil {
	log.Fatalln(err.Error())
}

// Continue from the first response and start a fresh input list for tool results
params.PreviousResponseID = openai.String(resp.ID)
params.Input = responses.ResponseNewParamsInputUnion{}

for _, output := range resp.Output {
	if output.Type != "function_call" {
		continue
	}
	toolCall := output.AsFunctionCall()

	// Send errors back to the model as the tool output so it can recover
	result, err := processToolCall(ctx, toolCall)
	if err != nil {
		result = err.Error()
	}
	params.Input.OfInputItemList = append(params.Input.OfInputItemList,
		responses.ResponseInputItemParamOfFunctionCallOutput(toolCall.CallID, result))
}

// No tool calls were made, so we already have our final response
if len(params.Input.OfInputItemList) == 0 {
	log.Println(resp.OutputText())
	return
}

// Make a final call with our tool results and no tools to get the final output
params.Tools = nil
resp, err = oaiClient.Responses.New(ctx, params)
if err != nil {
	log.Fatalln(err.Error())
}
log.Println(resp.OutputText())
```

Tool Calling in the OpenAI Responses API with Go

Web Search

Adding web search capabilities to our agents with the Responses API is as simple as adding the built-in tool definition to our tools list. With this tool enabled, the LLM can optionally invoke web_search_preview to retrieve search results and incorporate them into the LLM response automatically.

```go
// agentTools is the list of all tools available to the agent
var agentTools = []responses.ToolUnionParam{
	{OfWebSearch: &responses.WebSearchToolParam{Type: "web_search_preview"}},
	getStockTool,
}
```

Enabling Web Search with the OpenAI Responses API in Go
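One difference from Chat Completions is worth knowing here: in the Responses API, function tools are declared flat, with `type`, `name`, `description`, and `parameters` at the top level rather than nested under a `function` key, and built-in tools such as web search are just another entry with their own `type`. Below is a hand-written, illustrative JSON view of the tools list above (not output captured from the SDK, which may add fields such as `strict`), embedded in Go and checked with `encoding/json`. `toolsJSON` and `toolTypes` are hypothetical names.

```go
package main

import "encoding/json"

// toolsJSON is an illustrative, hand-written view of the tools array for the
// web search + get_stock_price example. Function tools are flat objects.
const toolsJSON = `[
  {"type": "web_search_preview"},
  {
    "type": "function",
    "name": "get_stock_price",
    "description": "Retrieves the current price of a single stock by its ticker symbol",
    "parameters": {
      "type": "object",
      "properties": {"symbol": {"type": "string", "description": "The ticker symbol of the stock to retrieve"}},
      "required": ["symbol"]
    }
  }
]`

// toolTypes decodes a tools array and returns each tool's "type" field.
func toolTypes(raw string) ([]string, error) {
	var tools []map[string]any
	if err := json.Unmarshal([]byte(raw), &tools); err != nil {
		return nil, err
	}
	types := make([]string, 0, len(tools))
	for _, t := range tools {
		s, _ := t["type"].(string)
		types = append(types, s)
	}
	return types, nil
}
```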

Vector Stores and File Search

Using the Responses API, we can give our agents retrieval capabilities by including the built-in file_search tool. This tool lets the model run semantic searches against OpenAI Vector Stores and use the retrieved content as context for its response. This assumes we've already added files to a Vector Store, either through the API or the OpenAI Dashboard.

To add files to a Vector Store through the API, you first need to upload the file to OpenAI using the Files API. The SDK determines the uploaded file's name and content type by checking whether the io.Reader you pass implements Name() and ContentType() methods. If it doesn't, the name and content type default to "anonymous_file" and "application/octet-stream". The SDK provides an openai.File() convenience function that wraps your Reader with these methods.

```go
fileReader, err := os.Open("document.pdf")
if err != nil {
	log.Fatalln(err.Error())
}
defer fileReader.Close()

// Wrap the reader so the upload carries a file name and content type
inputFile := openai.File(fileReader, "document.pdf", "application/pdf")

storedFile, err := oaiClient.Files.New(ctx, openai.FileNewParams{
	File:    inputFile,
	Purpose: openai.FilePurposeUserData,
})
if err != nil {
	log.Fatalf("error uploading file to OpenAI: %s", err)
}

log.Println(storedFile.ID)
```

Using the OpenAI Files API with Go

Once we've uploaded our file, we can attach it to a Vector Store. Attaching the file will automatically chunk it (if necessary), create embeddings, and store them in the Vector Store. We can optionally associate metadata attributes with the file when attaching it to the vector store to allow us to apply filters to the semantic search when using the file_search tool in our agent.

```go
// Attach the uploaded file to the Vector Store; OpenAI chunks and embeds it
_, err = oaiClient.VectorStores.Files.New(ctx, os.Getenv("VECTOR_STORE_ID"), openai.VectorStoreFileNewParams{
	FileID: storedFile.ID,
	// Attributes can be used later to filter file_search results
	Attributes: map[string]openai.VectorStoreFileNewParamsAttributeUnion{
		"file_type": {OfString: openai.String("application/pdf")},
	},
})
if err != nil {
	log.Fatalln("failed to associate file with Vector Store:", err.Error())
}
```

Using OpenAI Vector Stores with Go

Enabling file search in our agents is, once again, as simple as adding the file_search tool to our tools list. This tool has more parameters we can configure, but the principle is the same. VectorStoreIDs is the only required field. We can optionally filter the search by setting the Filters field to a FileSearchToolFiltersUnionParam.

```go
// shared is the github.com/openai/openai-go/shared package
var agentTools = []responses.ToolUnionParam{
	{
		OfFileSearch: &responses.FileSearchToolParam{
			VectorStoreIDs: []string{os.Getenv("VECTOR_STORE_ID")},
			MaxNumResults:  openai.Int(10),
			// Only search files whose file_type attribute equals application/pdf
			Filters: responses.FileSearchToolFiltersUnionParam{
				OfComparisonFilter: &shared.ComparisonFilterParam{
					Key:  "file_type",
					Type: "eq",
					Value: shared.ComparisonFilterValueUnionParam{
						OfString: openai.String("application/pdf"),
					},
				},
			},
			Type: "file_search",
		},
	},
	{OfWebSearch: &responses.WebSearchToolParam{Type: "web_search_preview"}},
	getStockTool,
}
```

Using the OpenAI Responses API File Search Tool with Go
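Filters can also be combined. As I understand the API, a compound filter has a `type` of `and` or `or` and a nested `filters` array of comparison filters (`eq`, `ne`, `gt`, `gte`, `lt`, `lte`). The sketch below builds that JSON with hypothetical stdlib structs so the shape is visible; the `year` attribute is made up for illustration and would need to be set on the file when attaching it to the Vector Store.

```go
package main

import "encoding/json"

// comparisonFilter matches a single attribute, e.g. {"type":"eq",...}.
type comparisonFilter struct {
	Type  string `json:"type"`
	Key   string `json:"key"`
	Value any    `json:"value"`
}

// compoundFilter combines filters with "and" or "or".
type compoundFilter struct {
	Type    string `json:"type"`
	Filters []any  `json:"filters"`
}

// pdfsFromYear builds a filter matching PDFs whose hypothetical "year"
// attribute is at least minYear.
func pdfsFromYear(minYear int) ([]byte, error) {
	return json.Marshal(compoundFilter{
		Type: "and",
		Filters: []any{
			comparisonFilter{Type: "eq", Key: "file_type", Value: "application/pdf"},
			comparisonFilter{Type: "gte", Key: "year", Value: minYear},
		},
	})
}
```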

Input Files and Images

In addition to being able to give our agents access to Vector Stores with the file_search tool, we can also include files and images as input to our Responses API requests. The Input field of the ResponseNewParams type is a union type ResponseNewParamsInputUnion. So far in most of our examples, we've been using the simple OfString option to just provide a single input text message.

The ResponseNewParamsInputUnion type also supports OfInputItemList which allows you to provide an array of input items of multiple types. In addition to text input, it also supports file input and image input.

Input files and images rely on the vision capabilities of the model you call, so you'll need a vision-capable model such as GPT-4o. For PDFs, OpenAI extracts both the text and an image of each page and passes them to the model along with the rest of your prompt, which lets the model reason about charts, diagrams, and other visual elements as well as the text.

At the time of writing, PDF is the only supported file type for input files. Input images can be PNG, JPEG, WEBP, or non-animated GIF.
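Because only a handful of formats are accepted, it can be worth rejecting unsupported files before uploading them. A minimal sketch using the standard library's `http.DetectContentType`, which sniffs the first bytes of the data; `supportedTypes` and `supportedInput` are hypothetical names. Sniffing cannot tell an animated GIF from a static one, so that check is left out.

```go
package main

import "net/http"

// supportedTypes maps the accepted MIME types to how they'd be sent.
var supportedTypes = map[string]string{
	"application/pdf": "file",
	"image/png":       "image",
	"image/jpeg":      "image",
	"image/webp":      "image",
	"image/gif":       "image", // animated GIFs are not detected here
}

// supportedInput sniffs data and reports its MIME type, whether it would be
// sent as an input file or an input image, and whether it is supported.
func supportedInput(data []byte) (mimeType, kind string, ok bool) {
	mimeType = http.DetectContentType(data)
	kind, ok = supportedTypes[mimeType]
	return mimeType, kind, ok
}
```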

The first step to using input files with the Responses API is to upload the file to OpenAI using the Files API, the same as we did for the file_search tool. Images can also be uploaded this way, or, if an image is already publicly hosted, you can provide its URL instead.

After that, you just need to reference the file or image in OfInputItemList.

```go
params.Input = responses.ResponseNewParamsInputUnion{
	OfInputItemList: responses.ResponseInputParam{
		// A single user message whose content mixes a file and a text prompt
		responses.ResponseInputItemParamOfMessage(
			responses.ResponseInputMessageContentListParam{
				responses.ResponseInputContentUnionParam{
					OfInputFile: &responses.ResponseInputFileParam{
						FileID: openai.String(storedFile.ID),
						Type:   "input_file",
					},
				},
				responses.ResponseInputContentUnionParam{
					OfInputText: &responses.ResponseInputTextParam{
						Text: "Provide a one paragraph summary of the provided document.",
						Type: "input_text",
					},
				},
			},
			"user",
		),
	},
}
```

Providing Input Files and Images to the OpenAI Responses API with Go
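For a publicly hosted image, the content list can reference a URL instead of a file ID. On the wire, a user message mixing an image URL and text looks roughly like the JSON this sketch produces. The structs are a hypothetical stdlib-only view of that shape (not the SDK types), `imageQuestion` is a made-up helper, and the URL is a placeholder.

```go
package main

import "encoding/json"

// contentPart is a hypothetical, simplified input content part.
type contentPart struct {
	Type     string `json:"type"`
	Text     string `json:"text,omitempty"`
	ImageURL string `json:"image_url,omitempty"`
	FileID   string `json:"file_id,omitempty"`
}

// inputMessage is a user message with mixed content parts.
type inputMessage struct {
	Role    string        `json:"role"`
	Content []contentPart `json:"content"`
}

// imageQuestion builds a message asking about an image hosted at url.
func imageQuestion(url, question string) ([]byte, error) {
	return json.Marshal(inputMessage{
		Role: "user",
		Content: []contentPart{
			{Type: "input_image", ImageURL: url},
			{Type: "input_text", Text: question},
		},
	})
}
```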

Enforcing Structured Output with JSON Schema

Instead of returning plain text, structured output forces the model to produce output that complies with an exact JSON Schema. This ensures the responses unmarshal correctly into your Go types and can even enforce rules such as a fixed set of allowed values. Structured output produces much more consistent results than describing a JSON format in system or developer prompts.

The first step to using structured output in your prompts is to add JSON Schema annotations to your Go types.

```go
// TIOBEIndex is the top-level result; jsonschema_description tags become
// field descriptions in the generated JSON Schema
type TIOBEIndex struct {
	IndexVersion string                       `json:"index_version" jsonschema_description:"The index version the results are from in MMMM yyyy format"`
	Rankings     []ProgrammingLanguageRanking `json:"rankings" jsonschema_description:"The ordered ranking results"`
}

type ProgrammingLanguageRanking struct {
	Name             string  `json:"name" jsonschema_description:"Programming language name"`
	CurrentRanking   int     `json:"current_ranking" jsonschema_description:"Where the language ranks in the current index"`
	PriorYearRanking int     `json:"prior_year_ranking" jsonschema_description:"Where the language ranked in the index 12 months prior"`
	Rating           float64 `json:"rating" jsonschema_description:"The popularity share for the programming language"`
	RatingChange     float64 `json:"rating_change" jsonschema_description:"The year over year ratings change"`
}
```

To pass our JSON Schema to the Responses API, we need it represented as a map[string]interface{}. We can create a helper function that uses reflection (here via the github.com/invopop/jsonschema package) to convert our types and struct tags to a JSON Schema. Because it's called from a package-level variable, the schema is generated once at program startup, not at compile time.

```go
import (
	"encoding/json"

	"github.com/invopop/jsonschema"
)

// TIOBEIndexSchema is generated once, when the package is initialized
var TIOBEIndexSchema = GenerateSchema[TIOBEIndex]()

// GenerateSchema reflects over T and returns its JSON Schema as a map
func GenerateSchema[T any]() map[string]interface{} {
	// Structured Outputs uses a subset of JSON Schema.
	// These flags are necessary to comply with the subset.
	reflector := jsonschema.Reflector{
		AllowAdditionalProperties: false,
		DoNotReference:            true,
	}
	var v T
	schema := reflector.Reflect(v)

	// Round-trip through JSON to get a generic map
	schemaJSON, err := schema.MarshalJSON()
	if err != nil {
		panic(err)
	}
	var schemaObj map[string]interface{}
	if err := json.Unmarshal(schemaJSON, &schemaObj); err != nil {
		panic(err)
	}
	return schemaObj
}
```

We can instruct the model to return results in the format specified by our JSON Schema using the Text field of ResponseNewParams.

```go
params := responses.ResponseNewParams{
	Text: responses.ResponseTextConfigParam{
		Format: responses.ResponseFormatTextConfigUnionParam{
			OfJSONSchema: &responses.ResponseFormatTextJSONSchemaConfigParam{
				Name:        "TIOBE-Index",
				Schema:      TIOBEIndexSchema,
				Strict:      openai.Bool(true),
				Description: openai.String("JSON Schema for the TIOBE Programming Community Index results"),
				Type:        "json_schema",
			},
		},
	},
	Model:           openai.ChatModelGPT4o,
	Temperature:     openai.Float(0.7),
	MaxOutputTokens: openai.Int(2048),
	Tools:           agentTools,
	Input: responses.ResponseNewParamsInputUnion{
		OfString: openai.String("Please provide me with the top ten results from the latest TIOBE index"),
	},
}
```

Enforcing Structured Output with JSON Schema Using the OpenAI Responses API in Go
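Once the response comes back, resp.OutputText() should contain JSON matching the schema, which you can unmarshal straight into the Go types. Below is a stdlib-only sketch of that step: it repeats the two struct definitions (without the jsonschema_description tags) so it compiles on its own, uses `json.Decoder.DisallowUnknownFields` as an extra guard, and `parseTIOBE` is a hypothetical helper. The JSON in the test is made-up placeholder data, not real index results.

```go
package main

import (
	"encoding/json"
	"strings"
)

type ProgrammingLanguageRanking struct {
	Name             string  `json:"name"`
	CurrentRanking   int     `json:"current_ranking"`
	PriorYearRanking int     `json:"prior_year_ranking"`
	Rating           float64 `json:"rating"`
	RatingChange     float64 `json:"rating_change"`
}

type TIOBEIndex struct {
	IndexVersion string                       `json:"index_version"`
	Rankings     []ProgrammingLanguageRanking `json:"rankings"`
}

// parseTIOBE decodes the model's structured output, rejecting any fields
// that are not part of the schema.
func parseTIOBE(outputText string) (TIOBEIndex, error) {
	var idx TIOBEIndex
	dec := json.NewDecoder(strings.NewReader(outputText))
	dec.DisallowUnknownFields()
	err := dec.Decode(&idx)
	return idx, err
}
```

With strict mode enabled the model should already match the schema, so the unknown-field check is a cheap safety net rather than the primary guarantee.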

In Summary

The Responses API is the newest API from OpenAI, designed to provide the simplicity of the Chat Completions API with built-in primitives that make developing agentic AI much simpler. While it provides a "batteries included" experience for everything you need to start building agent systems, it also makes it easy to swap out OpenAI's state management, retrieval, or built-in tools for your own if your use cases require finer-grained control.

OpenAI has taken steps to make Go a first-class supported language for developing AI applications with the openai-go package, which already supports the Responses API. Unfortunately, the documentation is still somewhat lacking, but hopefully, this introductory guide will be enough to get you started building software with the Responses API in Go.

The full code samples for this post are available on my GitHub.

Have you built AI systems in Go? Have you used the new Responses API? Have any general feedback? I'd love to hear your thoughts in the comments!